In order to interact with the world, your brain constructs and maintains internal representations of it. Our aim is to understand the nature of these representations and the computations performed on them to achieve behavioural goals. In the lab, we use visual psychophysics and memory tasks, extended reality (XR) technology, eye and body movement recordings, mathematical models and artificial neural networks. We also collaborate with researchers using brain imaging, recording and stimulation, and with neuropsychologists who study cognitive aging, mental illness and neurological disorders.

A major focus of the lab is visual working memory. Our ability to recall details of what we have just seen is remarkably limited. Our work has shown that the limit is not on the number of objects we can remember, but on the total resolution with which visual information can be maintained. Visual memory acts like a resource that can be allocated to important information in our environment: we investigate how this resource is distributed between features of the visual scene and the ways in which this affects our perception, decisions and actions.

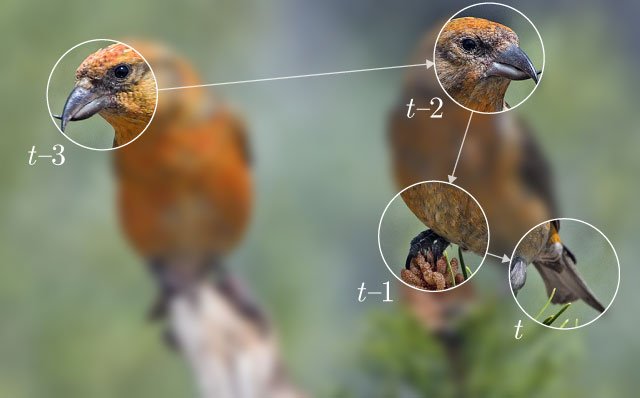

In everyday life, we redirect our gaze several times per second in order to extract detailed information from the world around us. These shifts of visual attention represent a simple case of exploration and decision-making behaviour. Our research has shown that working memory plays a vital role in bridging discrete transitions in visual input, so that processing does not have to begin anew after each eye movement.

In the brain, information about our environment and our planned actions is encoded in the joint spiking activity of populations of neurons. We develop models based on neural coding principles to identify mechanisms that are compatible with our experimental observations of human perception and behaviour. We also have long-standing interests in sensory prediction and motor learning, particularly in relation to tactile attenuation.

Joining the lab

To apply for an internship or an MPhil or PhD degree in the Bays lab, please complete this form. If you are interested in a post-doctoral position, please contact me by email.